Ab-initio Pipeline Demonstration

This tutorial demonstrates some key components of an ab-initio reconstruction pipeline using synthetic data generated with ASPIRE’s Simulation class of objects.

Download an Example Volume

We begin by downloading a high-resolution volume map of the 80S Ribosome, sourced from EMDB: https://www.ebi.ac.uk/emdb/EMD-2660. This is one of several volume maps that can be downloaded with ASPIRE’s data downloading utility by using the following import.

from aspire.downloader import emdb_2660

# Load 80S Ribosome as a ``Volume`` object.

original_vol = emdb_2660()

# During the preprocessing stages of the pipeline we will downsample

# the images to an image size of 64 pixels. Here, we also downsample the

# volume so we can compare to our reconstruction later.

res = 64

vol_ds = original_vol.downsample(res)

Note

A Volume can be saved using the Volume.save() method as follows:

fn = f"downsampled_80s_ribosome_size{res}.mrc"

vol_ds.save(fn, overwrite=True)

Create a Simulation Source

ASPIRE’s Simulation class can be used to generate a synthetic dataset of projection images. A Simulation object produces random projections of a supplied Volume and applies noise and CTF filters. The resulting stack of 2D images is stored in an Image object.

CTF Filters

Let’s start by creating CTF filters. The operators package contains a collection of filter classes that can be supplied to a Simulation. We use RadialCTFFilter to generate a set of CTF filters with various defocus values.

# Create CTF filters

import numpy as np

from aspire.operators import RadialCTFFilter

# Radial CTF Filter

defocus_min = 15000 # unit is angstroms

defocus_max = 25000

defocus_ct = 7

ctf_filters = [

RadialCTFFilter(defocus=d)

for d in np.linspace(defocus_min, defocus_max, defocus_ct)

]
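To illustrate why a range of defocus values is used, the sketch below evaluates a simplified radial CTF and reports where its first zero falls for each defocus. This is not ASPIRE's implementation: it ignores spherical aberration and amplitude contrast, assumes a 200 kV accelerating voltage, and the helper names (`electron_wavelength`, `simple_ctf`, `first_zero`) are hypothetical.

```python
import math


def electron_wavelength(voltage_kv):
    """Relativistic electron wavelength in angstroms for a voltage in kV."""
    volts = voltage_kv * 1e3
    return 12.2643247 / math.sqrt(volts + 0.978466e-6 * volts**2)


def simple_ctf(k, defocus, wavelength):
    """Simplified CTF at spatial frequency k (1/angstrom).

    Assumption: no spherical aberration or amplitude contrast, so the
    phase is chi = pi * wavelength * defocus * k**2. Sign conventions vary.
    """
    return math.sin(math.pi * wavelength * defocus * k**2)


def first_zero(defocus, wavelength):
    """Lowest nonzero frequency where the simplified CTF vanishes (chi = pi)."""
    return 1.0 / math.sqrt(wavelength * defocus)


wavelength = electron_wavelength(200)  # assumed 200 kV microscope
# The same seven defocus values as np.linspace(15000, 25000, 7).
defocus_values = [15000 + i * (25000 - 15000) / 6 for i in range(7)]
zeros = [first_zero(d, wavelength) for d in defocus_values]

for d, k0 in zip(defocus_values, zeros):
    print(f"defocus {d:8.1f} A -> first zero at {k0:.4f} 1/A")
```

Because the zeros move as the defocus changes, frequencies suppressed in images at one defocus are still present in images taken at another.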

Initialize Simulation Object

We feed our Volume and filters into Simulation to generate the dataset of images. When controlled white Gaussian noise is desired, WhiteNoiseAdder(var=VAR) can be used to generate a simulation data set around a specific noise variance.

Alternatively, users can bring their own images using an ArrayImageSource, or define their own custom noise functions via Simulation(..., noise_adder=CustomNoiseAdder(...)). Examples can be found in tutorials/class_averaging.py and experiments/simulated_abinitio_pipeline.py.

from aspire.noise import WhiteNoiseAdder

from aspire.source import Simulation

# For this ``Simulation`` we set all 2D offset vectors to zero,

# but by default offset vectors will be randomly distributed.

# We cache the Simulation to prevent regenerating the projections

# for each preprocessing stage.

src = Simulation(

n=2500, # number of projections

vols=original_vol, # volume source

offsets=0, # zero offsets (by default, images are randomly shifted)

unique_filters=ctf_filters,

noise_adder=WhiteNoiseAdder(var=0.0002), # desired noise variance

).cache()

Note

The noise variance value above was chosen based on other parameters for this quick tutorial, and can be changed to adjust the power of the additive noise. Alternatively, an SNR value can be prescribed as follows:

Simulation(..., noise_adder=WhiteNoiseAdder.from_snr(SNR))
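The relationship between a target SNR and a noise variance can be sketched in plain Python. The sketch assumes SNR is defined as mean signal power divided by noise variance. The helper names are hypothetical and this is not necessarily how `WhiteNoiseAdder.from_snr` is implemented.

```python
import random


def signal_power(pixels):
    """Mean squared pixel value of a clean (noise-free) image."""
    return sum(p * p for p in pixels) / len(pixels)


def noise_var_for_snr(pixels, snr):
    """Noise variance giving the requested SNR, assuming SNR = power / var."""
    return signal_power(pixels) / snr


def add_white_noise(pixels, var, rng):
    """Add independent zero-mean Gaussian noise of variance `var` to each pixel."""
    sigma = var**0.5
    return [p + rng.gauss(0.0, sigma) for p in pixels]


rng = random.Random(0)
clean = [0.0, 0.02, 0.04, 0.02, 0.0, 0.0]  # toy "image" as a flat pixel list
var = noise_var_for_snr(clean, snr=2.0)
noisy = add_white_noise(clean, var, rng)
print(f"signal power {signal_power(clean):.6f}, noise variance {var:.6f}")
```

Under this definition, halving the SNR doubles the noise variance for the same clean images.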

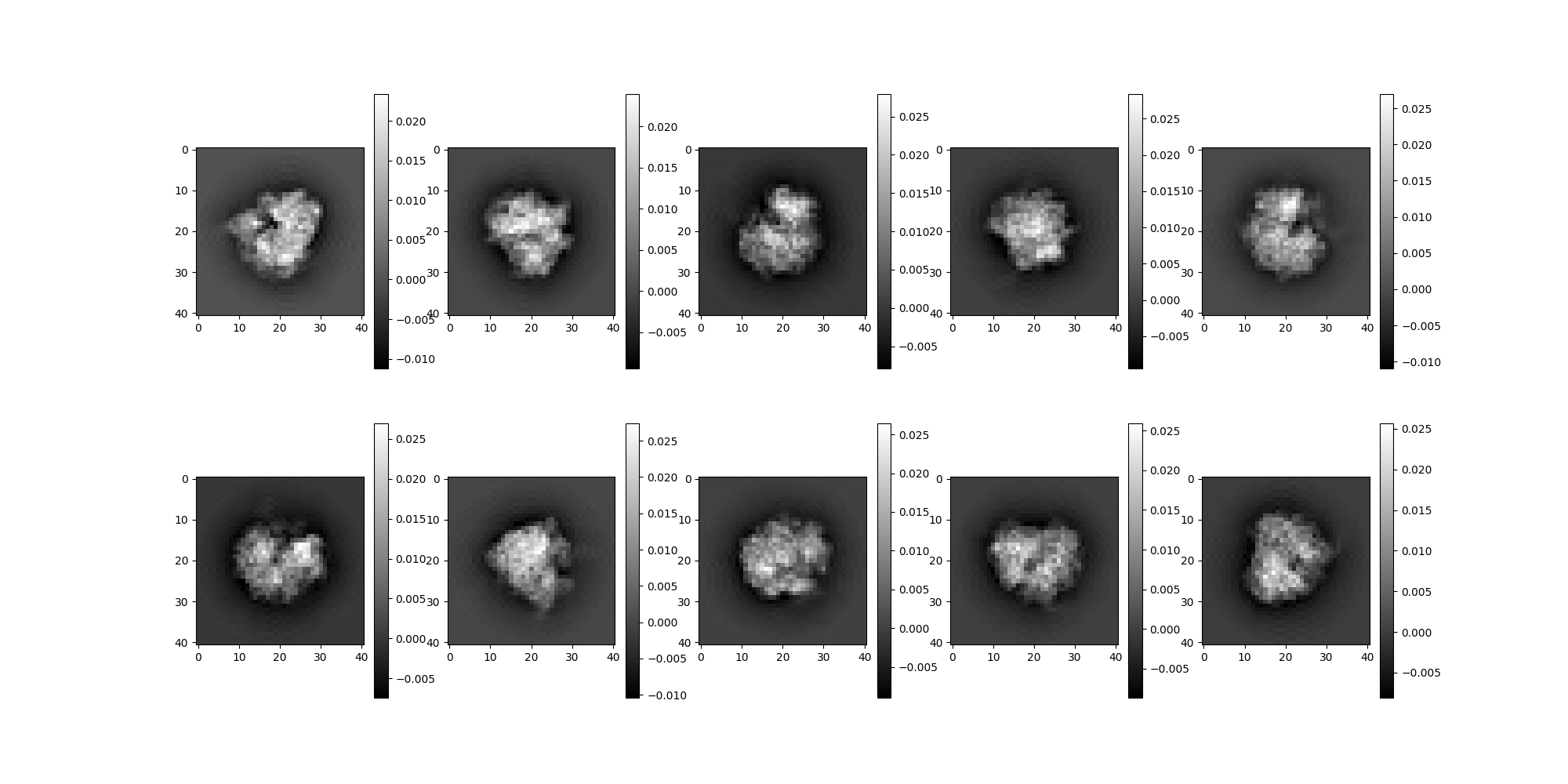

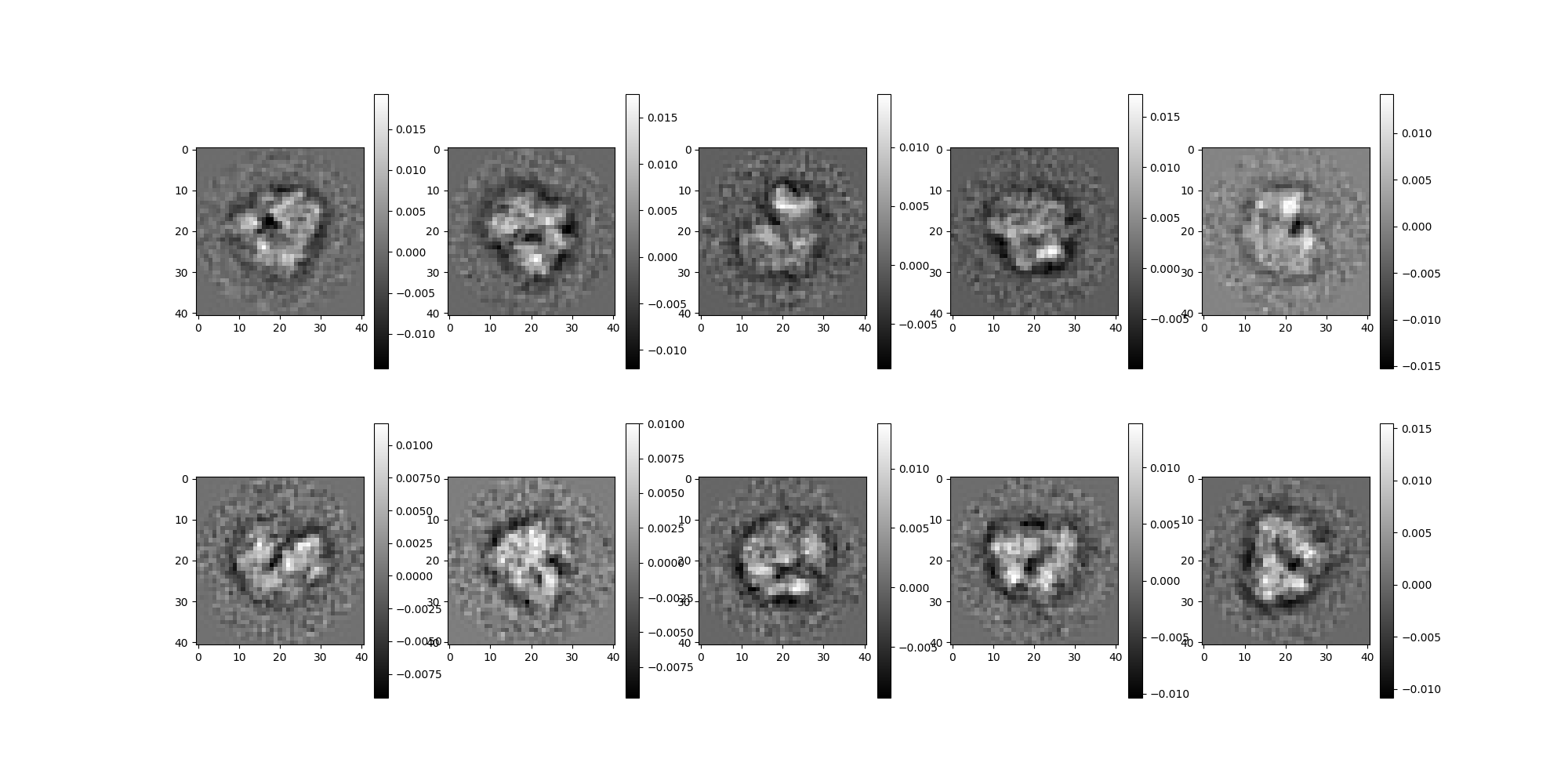

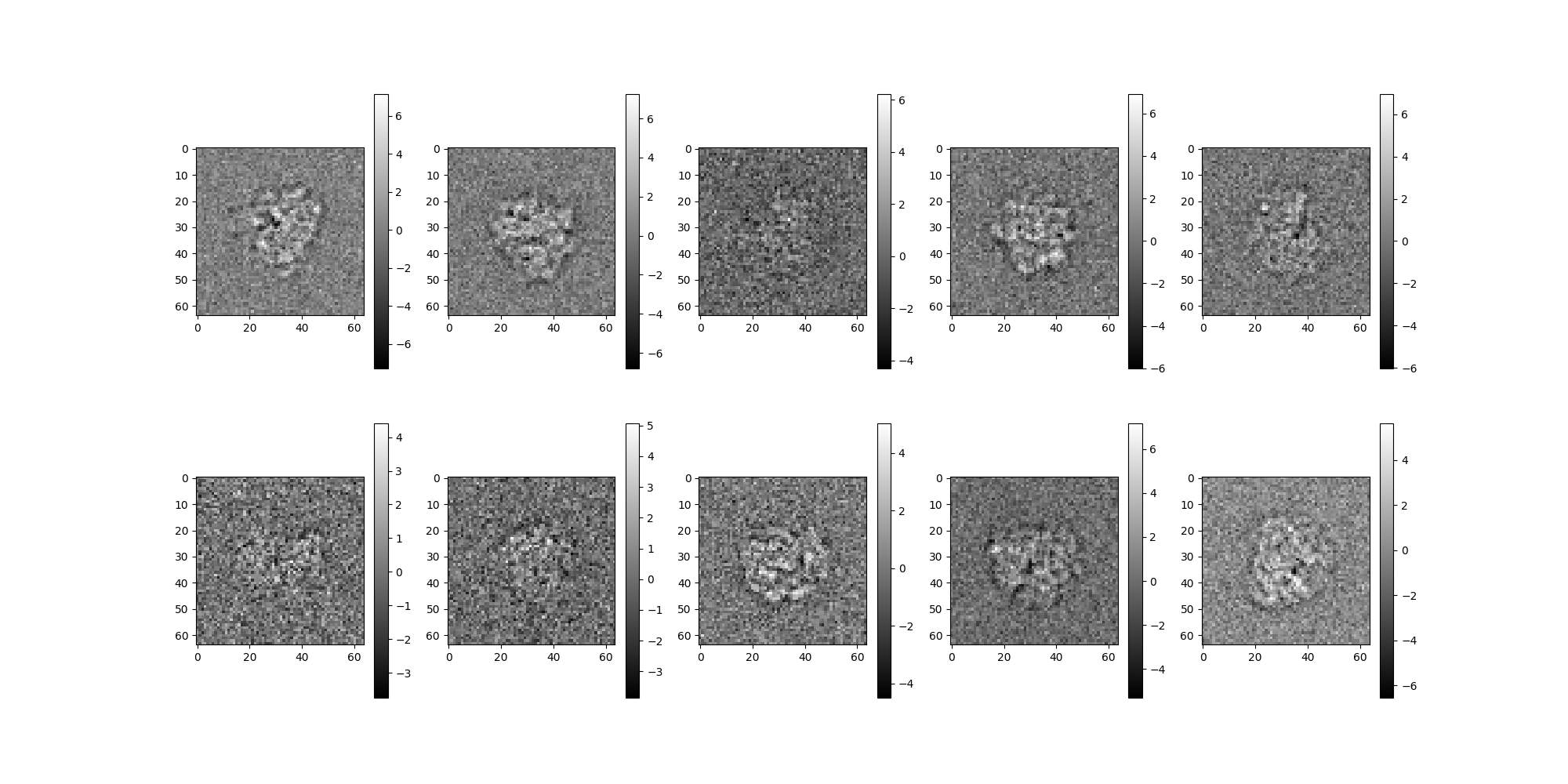

Several Views of the Projection Images

We can access several views of the projection images.

# with no corruption applied

src.projections[0:10].show()

# with CTF corruption but no noise

src.clean_images[0:10].show()

# with noise and CTF corruption

src.images[0:10].show()

Image Preprocessing

We apply some image preprocessing techniques to prepare the images for denoising via Class Averaging.

Downsampling

We downsample the images. Reducing the image size will improve the efficiency of subsequent pipeline stages. Metadata such as pixel size is scaled accordingly to correspond correctly with the image resolution.

src = src.downsample(res)

src.images[:10].show()
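The metadata scaling can be sketched with a short calculation. It assumes downsampling keeps the field of view fixed, so the pixel size grows by the ratio of the old to the new image size. The numbers and helper names below are hypothetical and are not taken from the example volume.

```python
def downsampled_pixel_size(pixel_size, original_size, new_size):
    """Pixel size after resampling the same field of view onto fewer pixels."""
    return pixel_size * original_size / new_size


def nyquist_resolution(pixel_size):
    """Finest resolvable feature size (angstroms) at a given pixel size."""
    return 2.0 * pixel_size


# Hypothetical values for illustration only.
orig_px, orig_size, new_size = 1.5, 256, 64
new_px = downsampled_pixel_size(orig_px, orig_size, new_size)
print(f"pixel size {orig_px} A -> {new_px} A")
print(f"Nyquist limit {nyquist_resolution(orig_px)} A -> {nyquist_resolution(new_px)} A")
```

The trade-off is that smaller images are cheaper to process but cap the resolution that later stages can recover.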

CTF Correction

We apply phase_flip() to correct for CTF effects.

src = src.phase_flip()

src.images[:10].show()

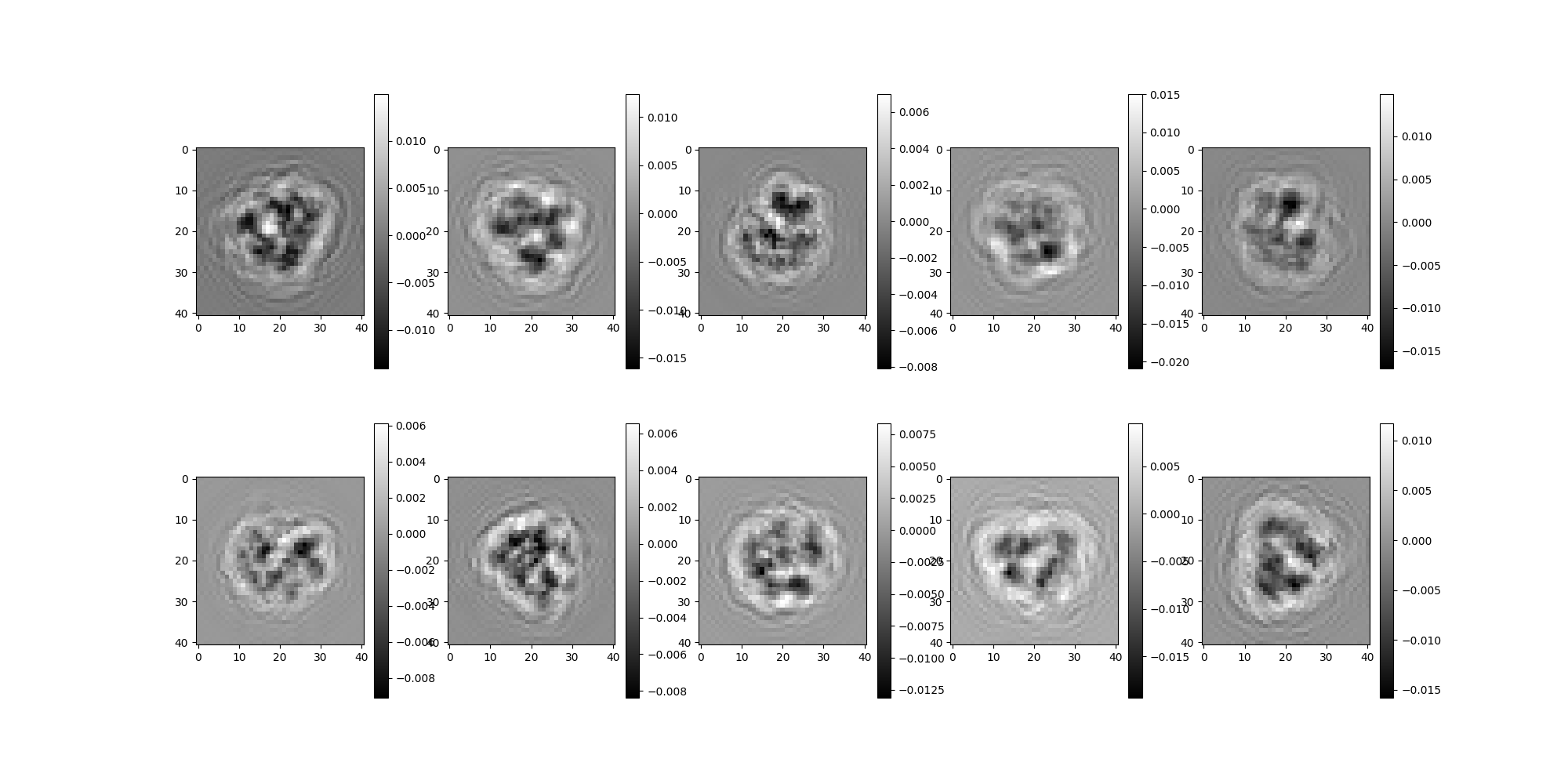
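As a rough illustration of phase flipping, the toy 1D sketch below multiplies each Fourier coefficient by the sign of the CTF at that frequency. The coefficient and CTF values are made up, and the helper is hypothetical rather than ASPIRE's `phase_flip()` implementation.

```python
import math


def phase_flip(coefficients, ctf_values):
    """Flip the sign of each Fourier coefficient where the CTF is negative."""
    return [c * math.copysign(1.0, h) for c, h in zip(coefficients, ctf_values)]


# Toy 1D example: true Fourier coefficients and an oscillating CTF.
true_coeffs = [1.0, 0.8, 0.5, 0.3]
ctf = [0.9, -0.6, 0.4, -0.2]

observed = [h * x for h, x in zip(ctf, true_coeffs)]  # CTF-corrupted data
corrected = phase_flip(observed, ctf)

# After flipping, the data behave as if filtered by |CTF| instead of CTF.
effective_ctf = [c / x for c, x in zip(corrected, true_coeffs)]
print(effective_ctf)
```

Phase flipping restores the sign of each frequency component but leaves the amplitude attenuation of the CTF in place.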

Normalize Background

We apply normalize_background() to prepare the images for class averaging.

src = src.normalize_background()

src.images[:10].show()

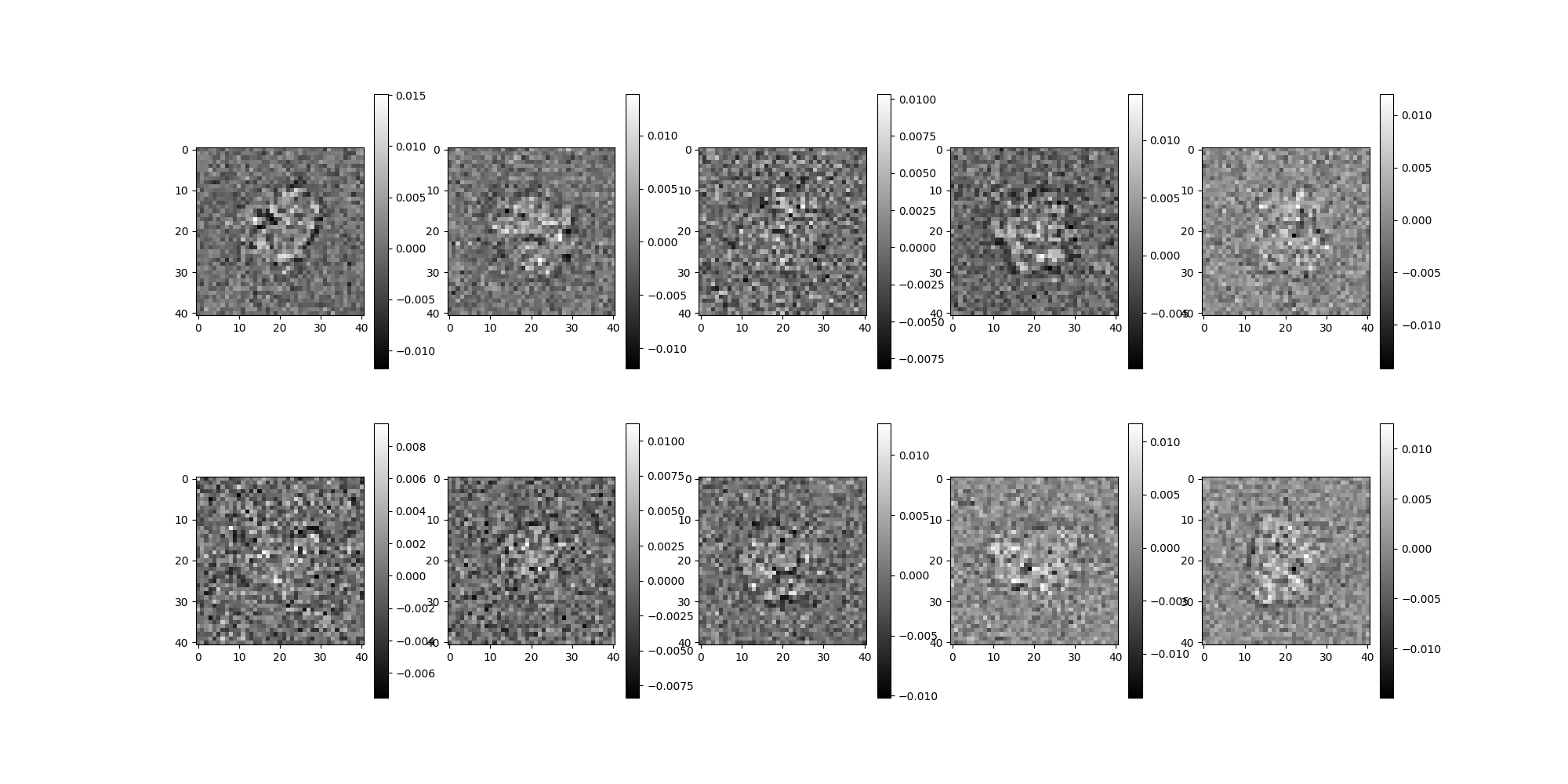
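One way to picture background normalization is the sketch below. It assumes the pixels outside a centered disk are treated as background, and that the whole image is shifted and scaled so those pixels have zero mean and unit variance. The function and its `bg_radius` convention here are illustrative assumptions, not ASPIRE's exact behavior.

```python
import math


def normalize_background(image, bg_radius=1.0):
    """Shift and scale a square image so background pixels have mean 0, variance 1.

    `image` is a list of rows. Pixels farther than `bg_radius` (in units of
    half the image width) from the center are treated as background.
    """
    n = len(image)
    center = (n - 1) / 2.0
    half = n / 2.0
    background = [
        image[i][j]
        for i in range(n)
        for j in range(n)
        if math.hypot(i - center, j - center) / half > bg_radius
    ]
    mean = sum(background) / len(background)
    var = sum((b - mean) ** 2 for b in background) / len(background)
    std = math.sqrt(var)
    return [[(p - mean) / std for p in row] for row in image]


# Toy 4x4 image: only the four corners lie outside the unit disk.
image = [
    [3.0, 5.0, 5.0, 5.0],
    [5.0, 9.0, 9.0, 5.0],
    [5.0, 9.0, 9.0, 5.0],
    [7.0, 5.0, 5.0, 5.0],
]
normalized = normalize_background(image)
corners = [normalized[0][0], normalized[0][3], normalized[3][0], normalized[3][3]]
print(corners)
```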

Noise Whitening

We apply whiten() to estimate and whiten the noise.

src = src.whiten()

src.images[:10].show()
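The idea behind whitening can be sketched on toy data: estimate the noise power in each frequency band, then divide the coefficients in that band by the square root of the estimate so every band ends up with unit power. The band names, sample values and helpers are hypothetical, and the sketch does not reflect how ASPIRE estimates the noise spectrum.

```python
import math


def estimate_noise_power(samples_by_band):
    """Mean squared magnitude of noise coefficients in each frequency band."""
    return {
        band: sum(abs(c) ** 2 for c in coeffs) / len(coeffs)
        for band, coeffs in samples_by_band.items()
    }


def whiten(samples_by_band, power):
    """Divide each coefficient by the square root of its band's noise power."""
    return {
        band: [c / math.sqrt(power[band]) for c in coeffs]
        for band, coeffs in samples_by_band.items()
    }


# Toy colored noise: low frequencies carry more power than high ones.
noise = {
    "low": [2.0, -4.0, 4.0, -2.0],
    "mid": [1.0, -1.0, 2.0, -2.0],
    "high": [0.5, -0.5, 0.5, -0.5],
}
power = estimate_noise_power(noise)
whitened_power = estimate_noise_power(whiten(noise, power))
print(power)
print(whitened_power)
```

After whitening, the noise power is flat across bands, which is the white-noise assumption many downstream algorithms rely on.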

Contrast Inversion

We apply invert_contrast() to ensure a positive-valued signal.

src = src.invert_contrast()
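A minimal sketch of the decision behind contrast inversion follows. It assumes the check compares the mean of the particle region against the mean of the background, and negates the data when the particle is darker. The pixel values and helper names are hypothetical, and ASPIRE's actual criterion may differ.

```python
def needs_inversion(signal_pixels, background_pixels):
    """True when the particle region is darker than the background."""
    signal_mean = sum(signal_pixels) / len(signal_pixels)
    background_mean = sum(background_pixels) / len(background_pixels)
    return signal_mean < background_mean


def invert_contrast(pixels):
    """Negate every pixel value."""
    return [-p for p in pixels]


signal = [-0.8, -1.1, -0.9]    # hypothetical pixels inside the particle
background = [0.1, -0.1, 0.0]  # hypothetical pixels outside it

if needs_inversion(signal, background):
    signal = invert_contrast(signal)
    background = invert_contrast(background)

print(signal)
```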

Caching

We apply cache to store the results of the ImageSource pipeline up to this point. This is optional, but can provide benefit when used deliberately on machines with adequate memory.

src = src.cache()
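The trade-off behind caching can be sketched with a toy lazy pipeline. `LazySource` below is a hypothetical stand-in, not an ASPIRE class: each stage is deferred until images are requested, and `cache()` evaluates the stages once and stores the result in memory.

```python
class LazySource:
    """Hypothetical stand-in for a lazily evaluated image pipeline."""

    def __init__(self, data, stages=()):
        self._data = list(data)
        self._stages = tuple(stages)
        self.evaluations = 0  # counts how often the stages are re-run

    def apply(self, fn):
        """Return a new source with one more deferred stage."""
        return LazySource(self._data, self._stages + (fn,))

    def images(self):
        """Run every deferred stage on the stored data."""
        self.evaluations += 1
        out = self._data
        for fn in self._stages:
            out = [fn(x) for x in out]
        return out

    def cache(self):
        """Evaluate once and return a source that holds the results."""
        return LazySource(self.images())


pipeline = LazySource([1.0, 2.0]).apply(lambda x: x * 2).apply(lambda x: x + 1)
cached = pipeline.cache()
print(cached.images())
```

Without the cache, every request for images re-runs all stages. With it, the results occupy memory but repeated requests are cheap.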

Class Averaging

For this tutorial we use the DebugClassAvgSource to generate an ImageSource of class averages. Internally, DebugClassAvgSource uses the RIRClass2D object to classify the source images via the rotationally invariant representation (RIR) algorithm and the TopClassSelector object to select the first n_classes images in the original order from the source. In practice, class selection is commonly done by sorting class averages based on some configurable notion of quality (contrast, neighbor distance, etc.). This can be accomplished by providing a custom class selector to ClassAverageSource, which changes the ordering of the classes it returns.

from aspire.denoising import DebugClassAvgSource

avgs = DebugClassAvgSource(src=src)

# We'll continue our pipeline using only the first ``n_classes`` from

# ``avgs``. The ``cache()`` call is used here to precompute results

# for the ``:n_classes`` slice. This avoids recomputing the same

# images twice when peeking in the next cell then requesting them in

# the following ``CLSyncVoting`` algorithm. Outside of demonstration

# purposes, where we are repeatedly peeking at various stage results,

# such caching can be dropped allowing for more lazy evaluation.

n_classes = 250

avgs = avgs[:n_classes].cache()
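The difference between taking the first classes in order and selecting by quality can be sketched as follows. The scores and helper functions are hypothetical and do not use ASPIRE's class selector API.

```python
def select_top(n_available, n_classes):
    """Keep the first n_classes indices in their original order."""
    return list(range(min(n_classes, n_available)))


def select_by_quality(scores, n_classes):
    """Keep the n_classes indices with the highest quality score, best first."""
    ranked = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    return ranked[:n_classes]


# Hypothetical per-class quality scores (for example, a contrast measure).
scores = [0.2, 0.9, 0.5, 0.7, 0.1]
top = select_top(len(scores), 3)
best = select_by_quality(scores, 3)
print(top)
print(best)
```

With in-order selection, class average `i` still corresponds to source image `i`. A quality-based selector reorders the classes, so that correspondence no longer holds.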

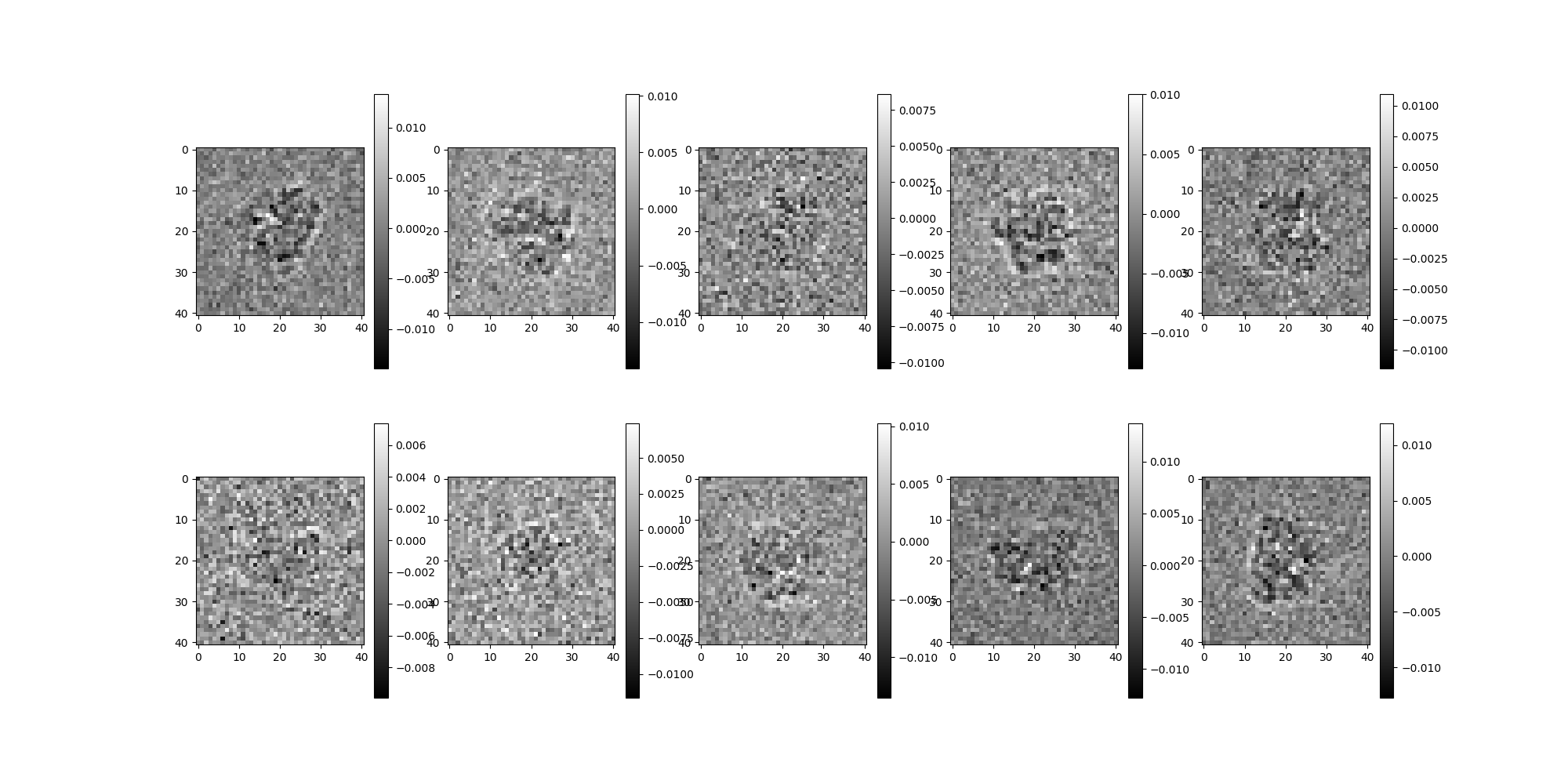

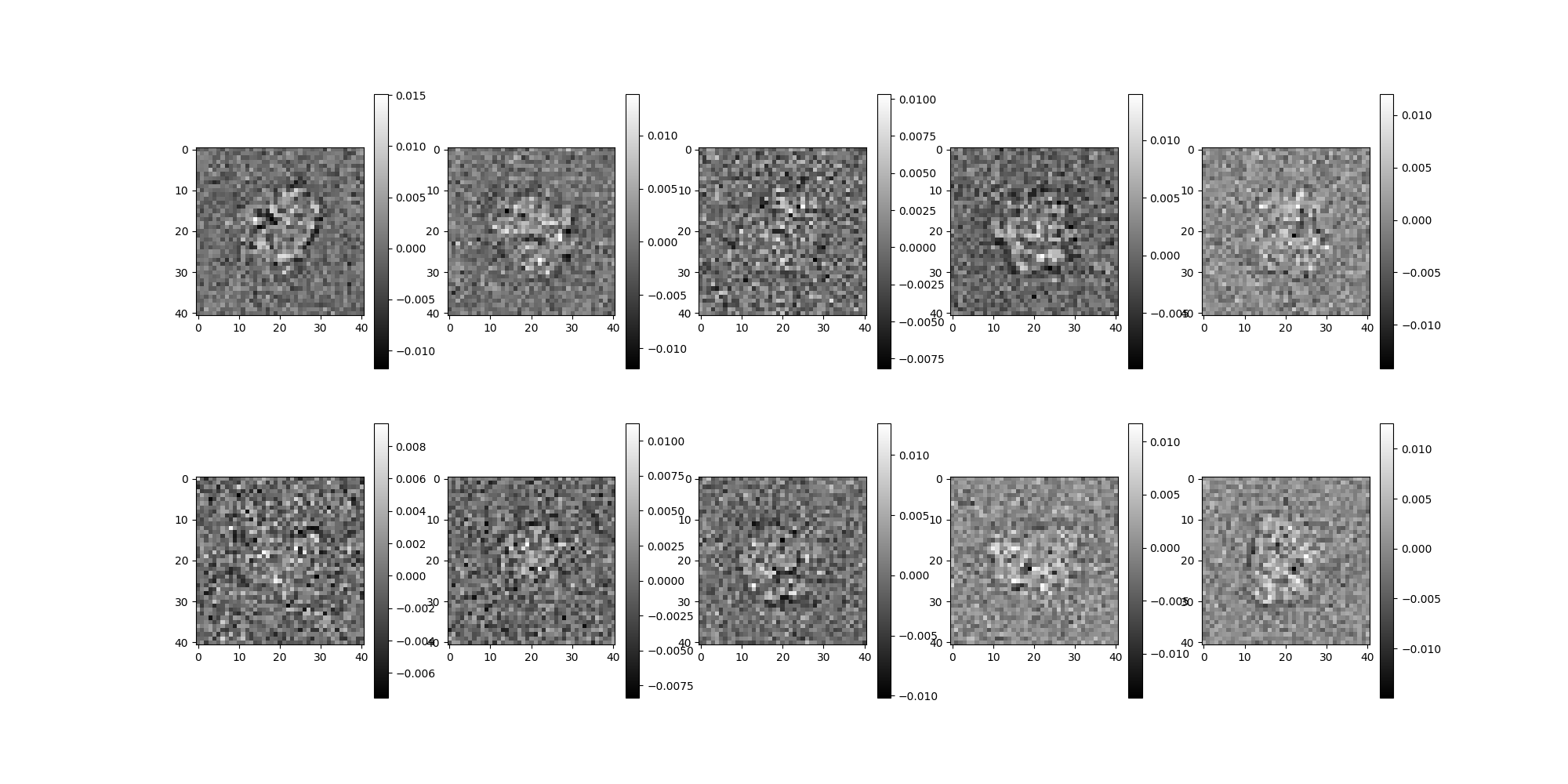

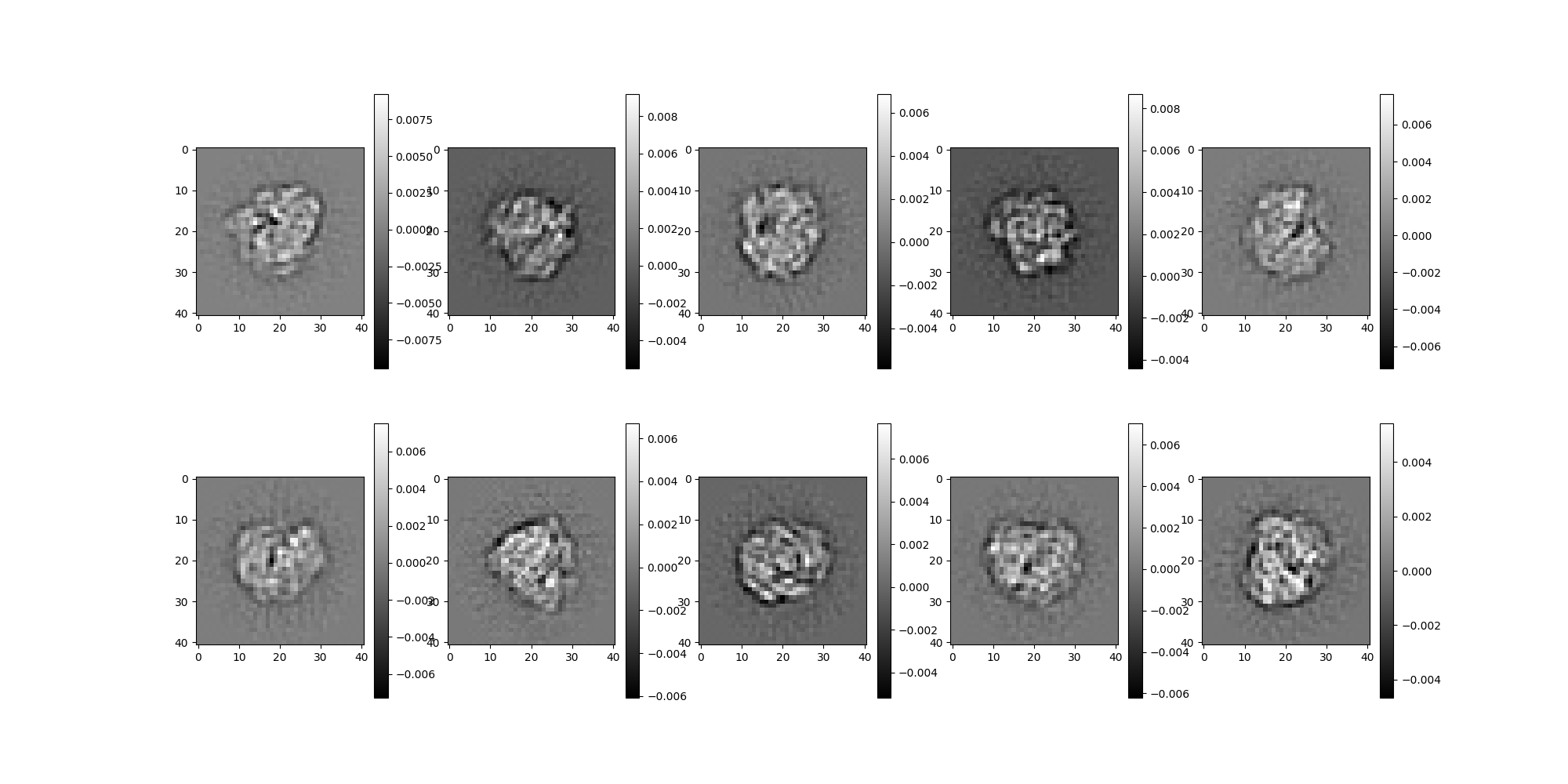

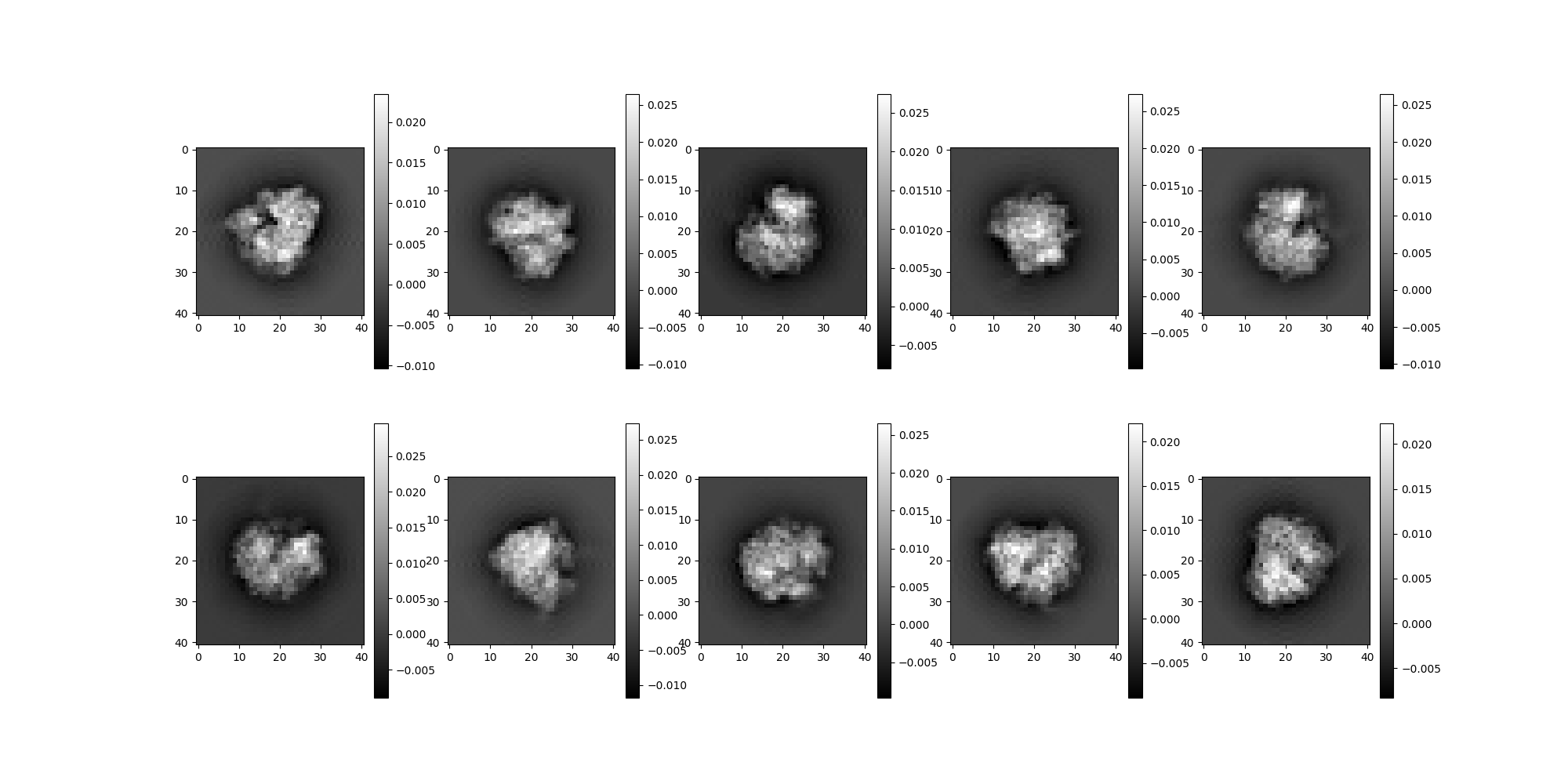

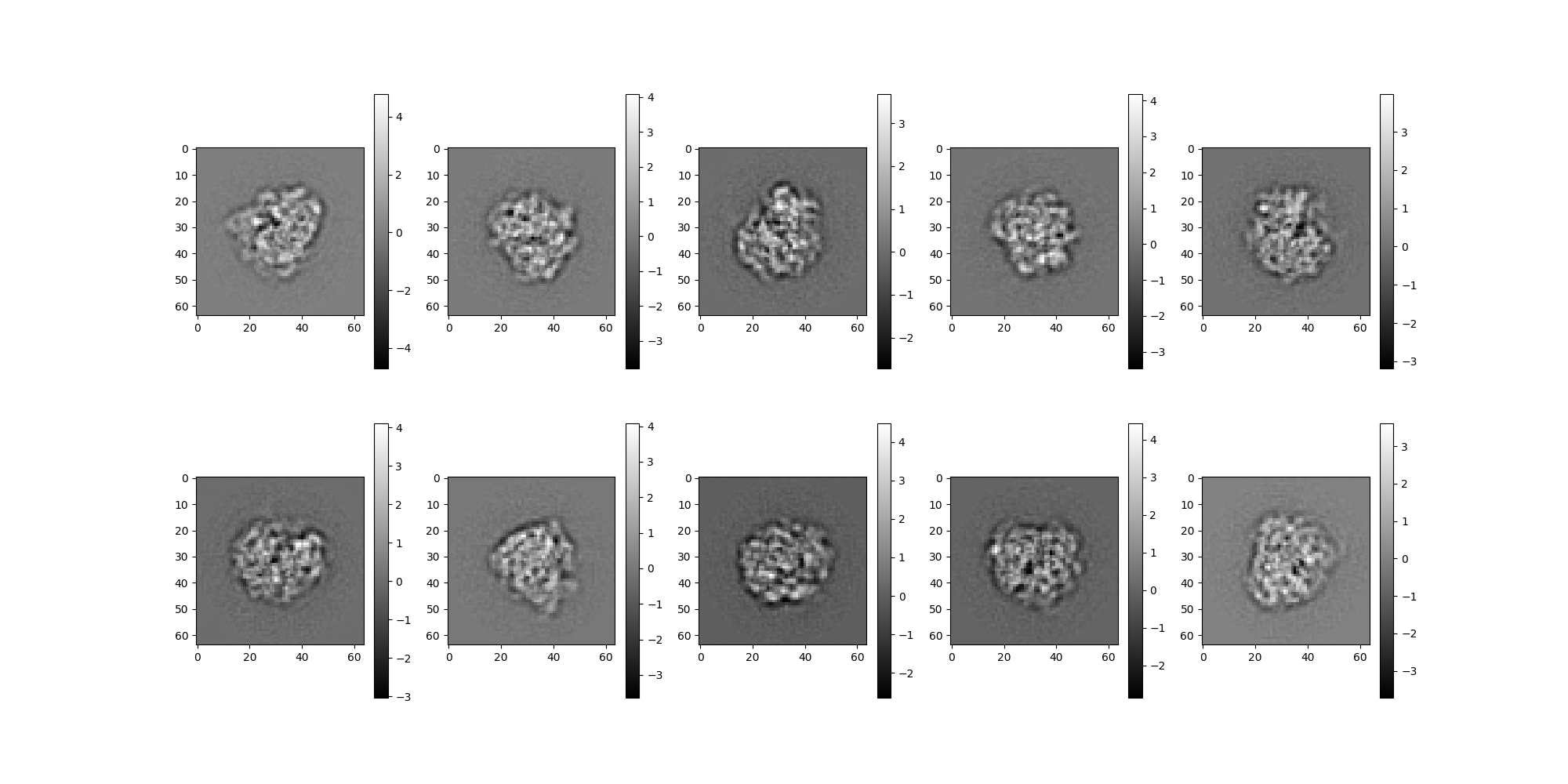

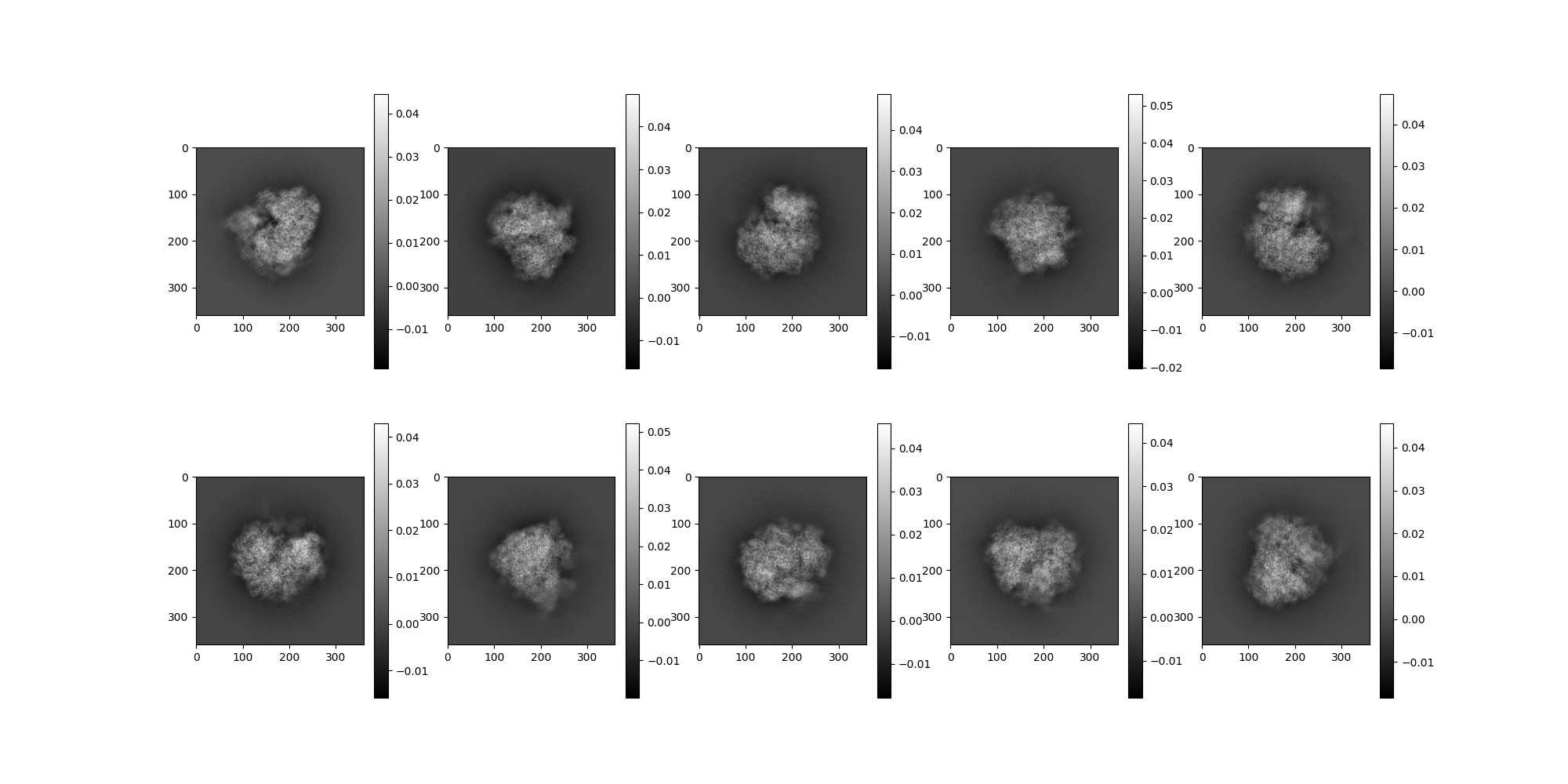

View the Class Averages

# Show class averages

avgs.images[0:10].show()

# Show original images corresponding to those classes. This 1:1

# comparison is only expected to work because we used

# ``TopClassSelector`` to select our classes.

src.images[0:10].show()

Orientation Estimation

We create an OrientedSource, which consumes an ImageSource object and an orientation estimator, and returns a new source which lazily estimates orientations. In this case we supply avgs for our source and a CLSyncVoting class instance for our orientation estimator. The CLSyncVoting algorithm employs a common-lines method with synchronization and voting.

from aspire.abinitio import CLSyncVoting

from aspire.source import OrientedSource

from aspire.utils import Rotation

# Stash true rotations for later comparison

true_rotations = Rotation(src.rotations[:n_classes])

# Instantiate a ``CLSyncVoting`` object for estimating orientations.

orient_est = CLSyncVoting(avgs)

# Instantiate an ``OrientedSource``.

oriented_src = OrientedSource(avgs, orient_est)

# Estimate Rotations.

est_rotations = oriented_src.rotations
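Common-lines methods compare images pairwise, so the amount of work grows quadratically with the number of images. The sketch below counts the pairs for the 250 class averages used here, assuming each unordered pair of images is visited once. The helper name is hypothetical.

```python
from itertools import combinations
from math import comb


def n_common_line_pairs(n_images):
    """Number of unordered image pairs, each sharing one common line."""
    return comb(n_images, 2)


n_classes = 250
pairs = n_common_line_pairs(n_classes)
print(pairs)

# The same count by explicit enumeration of index pairs (i < j).
enumerated = sum(1 for _ in combinations(range(n_classes), 2))
print(enumerated == pairs)
```

Doubling the number of class averages roughly quadruples the number of pairs, which is one reason the pipeline estimates orientations from a limited set of class averages.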


Searching over common line pairs: 16%|█▋ | 5065/31125 [00:17<01:30, 288.94it/s]

Searching over common line pairs: 16%|█▋ | 5094/31125 [00:17<01:30, 288.66it/s]

Searching over common line pairs: 16%|█▋ | 5124/31125 [00:17<01:29, 289.40it/s]

Searching over common line pairs: 17%|█▋ | 5154/31125 [00:17<01:29, 289.97it/s]

Searching over common line pairs: 17%|█▋ | 5184/31125 [00:17<01:29, 290.18it/s]

Searching over common line pairs: 17%|█▋ | 5214/31125 [00:17<01:29, 290.46it/s]

Searching over common line pairs: 17%|█▋ | 5244/31125 [00:17<01:29, 290.50it/s]

Searching over common line pairs: 17%|█▋ | 5274/31125 [00:18<01:29, 289.62it/s]

Searching over common line pairs: 17%|█▋ | 5303/31125 [00:18<01:29, 289.53it/s]

Searching over common line pairs: 17%|█▋ | 5332/31125 [00:18<01:29, 288.82it/s]

Searching over common line pairs: 17%|█▋ | 5361/31125 [00:18<01:29, 288.92it/s]

Searching over common line pairs: 17%|█▋ | 5390/31125 [00:18<01:29, 288.66it/s]

Searching over common line pairs: 17%|█▋ | 5419/31125 [00:18<01:29, 288.81it/s]

Searching over common line pairs: 18%|█▊ | 5448/31125 [00:18<01:29, 287.79it/s]

Searching over common line pairs: 18%|█▊ | 5477/31125 [00:18<01:29, 287.35it/s]

Searching over common line pairs: 18%|█▊ | 5506/31125 [00:18<01:29, 287.74it/s]

Searching over common line pairs: 18%|█▊ | 5535/31125 [00:18<01:28, 287.98it/s]

Searching over common line pairs: 18%|█▊ | 5564/31125 [00:19<01:28, 287.62it/s]

Searching over common line pairs: 18%|█▊ | 5593/31125 [00:19<01:28, 287.86it/s]

Searching over common line pairs: 18%|█▊ | 5622/31125 [00:19<01:28, 287.74it/s]

Searching over common line pairs: 18%|█▊ | 5651/31125 [00:19<01:28, 287.29it/s]

Searching over common line pairs: 18%|█▊ | 5680/31125 [00:19<01:28, 288.06it/s]

Searching over common line pairs: 18%|█▊ | 5709/31125 [00:19<01:28, 288.10it/s]

Searching over common line pairs: 18%|█▊ | 5739/31125 [00:19<01:27, 289.03it/s]

Searching over common line pairs: 19%|█▊ | 5769/31125 [00:19<01:27, 289.49it/s]

Searching over common line pairs: 19%|█▊ | 5799/31125 [00:19<01:27, 289.77it/s]

Searching over common line pairs: 19%|█▊ | 5828/31125 [00:19<01:27, 289.79it/s]

Searching over common line pairs: 19%|█▉ | 5858/31125 [00:20<01:27, 290.01it/s]

Searching over common line pairs: 19%|█▉ | 5888/31125 [00:20<01:27, 289.90it/s]

Searching over common line pairs: 19%|█▉ | 5917/31125 [00:20<01:26, 289.81it/s]

Searching over common line pairs: 19%|█▉ | 5946/31125 [00:20<01:27, 288.89it/s]

Searching over common line pairs: 19%|█▉ | 5975/31125 [00:20<01:27, 288.31it/s]

Searching over common line pairs: 19%|█▉ | 6004/31125 [00:20<01:27, 288.22it/s]

Searching over common line pairs: 19%|█▉ | 6033/31125 [00:20<01:27, 288.29it/s]

Searching over common line pairs: 19%|█▉ | 6062/31125 [00:20<01:26, 288.46it/s]

Searching over common line pairs: 20%|█▉ | 6091/31125 [00:20<01:26, 288.42it/s]

Searching over common line pairs: 20%|█▉ | 6120/31125 [00:20<01:26, 288.07it/s]

Searching over common line pairs: 20%|█▉ | 6149/31125 [00:21<01:26, 288.46it/s]

Searching over common line pairs: 20%|█▉ | 6178/31125 [00:21<01:26, 288.25it/s]

Searching over common line pairs: 20%|█▉ | 6207/31125 [00:21<01:26, 287.32it/s]

Searching over common line pairs: 20%|██ | 6236/31125 [00:21<01:26, 286.72it/s]

Searching over common line pairs: 20%|██ | 6265/31125 [00:21<01:26, 286.44it/s]

Searching over common line pairs: 20%|██ | 6294/31125 [00:21<01:26, 287.01it/s]

Searching over common line pairs: 20%|██ | 6323/31125 [00:21<01:26, 287.20it/s]

Searching over common line pairs: 20%|██ | 6352/31125 [00:21<01:26, 287.28it/s]

Searching over common line pairs: 21%|██ | 6381/31125 [00:21<01:26, 287.53it/s]

Searching over common line pairs: 21%|██ | 6410/31125 [00:21<01:25, 287.83it/s]

Searching over common line pairs: 21%|██ | 6440/31125 [00:22<01:25, 288.61it/s]

Searching over common line pairs: 21%|██ | 6469/31125 [00:22<01:25, 288.60it/s]

Searching over common line pairs: 21%|██ | 6498/31125 [00:22<01:25, 288.39it/s]

Searching over common line pairs: 21%|██ | 6527/31125 [00:22<01:25, 288.37it/s]

Searching over common line pairs: 21%|██ | 6556/31125 [00:22<01:25, 288.78it/s]

Searching over common line pairs: 21%|██ | 6585/31125 [00:22<01:24, 288.99it/s]

Searching over common line pairs: 21%|██ | 6614/31125 [00:22<01:24, 288.84it/s]

Searching over common line pairs: 21%|██▏ | 6643/31125 [00:22<01:25, 287.89it/s]

Searching over common line pairs: 21%|██▏ | 6672/31125 [00:22<01:24, 287.84it/s]

Searching over common line pairs: 22%|██▏ | 6701/31125 [00:22<01:24, 288.37it/s]

Searching over common line pairs: 22%|██▏ | 6730/31125 [00:23<01:24, 288.47it/s]

Searching over common line pairs: 22%|██▏ | 6759/31125 [00:23<01:24, 288.56it/s]

Searching over common line pairs: 22%|██▏ | 6788/31125 [00:23<01:24, 288.34it/s]

Searching over common line pairs: 22%|██▏ | 6817/31125 [00:23<01:24, 287.94it/s]

Searching over common line pairs: 22%|██▏ | 6846/31125 [00:23<01:24, 287.92it/s]

Searching over common line pairs: 22%|██▏ | 6875/31125 [00:23<01:24, 287.72it/s]

Searching over common line pairs: 22%|██▏ | 6904/31125 [00:23<01:24, 287.51it/s]

Searching over common line pairs: 22%|██▏ | 6933/31125 [00:23<01:24, 287.53it/s]

Searching over common line pairs: 22%|██▏ | 6962/31125 [00:23<01:24, 287.18it/s]

Searching over common line pairs: 22%|██▏ | 6991/31125 [00:24<01:24, 287.00it/s]

Searching over common line pairs: 23%|██▎ | 7020/31125 [00:24<01:23, 287.08it/s]

Searching over common line pairs: 23%|██▎ | 7049/31125 [00:24<01:23, 286.89it/s]

Searching over common line pairs: 23%|██▎ | 7078/31125 [00:24<01:23, 287.19it/s]

Searching over common line pairs: 23%|██▎ | 7107/31125 [00:24<01:23, 287.25it/s]

Searching over common line pairs: 23%|██▎ | 7136/31125 [00:24<01:23, 287.63it/s]

Searching over common line pairs: 23%|██▎ | 7165/31125 [00:24<01:23, 287.65it/s]

Searching over common line pairs: 23%|██▎ | 7194/31125 [00:24<01:23, 287.96it/s]

Searching over common line pairs: 23%|██▎ | 7223/31125 [00:24<01:22, 288.21it/s]

Searching over common line pairs: 23%|██▎ | 7252/31125 [00:24<01:22, 288.74it/s]

Searching over common line pairs: 23%|██▎ | 7281/31125 [00:25<01:22, 288.43it/s]

Searching over common line pairs: 23%|██▎ | 7310/31125 [00:25<01:22, 288.52it/s]

Searching over common line pairs: 24%|██▎ | 7339/31125 [00:25<01:22, 288.67it/s]

Searching over common line pairs: 24%|██▎ | 7368/31125 [00:25<01:22, 288.97it/s]

Searching over common line pairs: 24%|██▍ | 7397/31125 [00:25<01:22, 288.14it/s]

Searching over common line pairs: 24%|██▍ | 7426/31125 [00:25<01:22, 288.37it/s]

Searching over common line pairs: 24%|██▍ | 7455/31125 [00:25<01:22, 288.55it/s]

Searching over common line pairs: 24%|██▍ | 7484/31125 [00:25<01:21, 288.31it/s]

Searching over common line pairs: 24%|██▍ | 7513/31125 [00:25<01:22, 287.91it/s]

Searching over common line pairs: 24%|██▍ | 7542/31125 [00:25<01:22, 287.37it/s]

Searching over common line pairs: 24%|██▍ | 7571/31125 [00:26<01:22, 286.74it/s]

Searching over common line pairs: 24%|██▍ | 7600/31125 [00:26<01:22, 286.87it/s]

Searching over common line pairs: 25%|██▍ | 7629/31125 [00:26<01:21, 287.29it/s]

Searching over common line pairs: 25%|██▍ | 7659/31125 [00:26<01:21, 288.21it/s]

Searching over common line pairs: 25%|██▍ | 7688/31125 [00:26<01:21, 287.93it/s]

Searching over common line pairs: 25%|██▍ | 7717/31125 [00:26<01:21, 287.67it/s]

Searching over common line pairs: 25%|██▍ | 7746/31125 [00:26<01:21, 288.36it/s]

Searching over common line pairs: 25%|██▍ | 7775/31125 [00:26<01:20, 288.73it/s]

Searching over common line pairs: 25%|██▌ | 7804/31125 [00:26<01:20, 288.77it/s]

Searching over common line pairs: 25%|██▌ | 7833/31125 [00:26<01:20, 288.84it/s]

Searching over common line pairs: 25%|██▌ | 7862/31125 [00:27<01:20, 288.87it/s]

Searching over common line pairs: 25%|██▌ | 7891/31125 [00:27<01:20, 288.53it/s]

Searching over common line pairs: 25%|██▌ | 7921/31125 [00:27<01:20, 289.35it/s]

Searching over common line pairs: 26%|██▌ | 7951/31125 [00:27<01:19, 290.04it/s]

Searching over common line pairs: 26%|██▌ | 7981/31125 [00:27<01:20, 289.10it/s]

Searching over common line pairs: 26%|██▌ | 8011/31125 [00:27<01:19, 289.52it/s]

Searching over common line pairs: 26%|██▌ | 8041/31125 [00:27<01:19, 290.28it/s]

Searching over common line pairs: 26%|██▌ | 8071/31125 [00:27<01:19, 290.33it/s]

Searching over common line pairs: 26%|██▌ | 8101/31125 [00:27<01:19, 290.41it/s]

Searching over common line pairs: 26%|██▌ | 8131/31125 [00:27<01:19, 288.97it/s]

Searching over common line pairs: 26%|██▌ | 8160/31125 [00:28<01:19, 288.66it/s]

Searching over common line pairs: 26%|██▋ | 8189/31125 [00:28<01:19, 288.79it/s]

Searching over common line pairs: 26%|██▋ | 8218/31125 [00:28<01:19, 288.17it/s]

Searching over common line pairs: 26%|██▋ | 8247/31125 [00:28<01:19, 288.43it/s]

Searching over common line pairs: 27%|██▋ | 8276/31125 [00:28<01:19, 287.83it/s]

Searching over common line pairs: 27%|██▋ | 8305/31125 [00:28<01:19, 288.30it/s]

Searching over common line pairs: 27%|██▋ | 8334/31125 [00:28<01:19, 288.40it/s]

Searching over common line pairs: 27%|██▋ | 8363/31125 [00:28<01:18, 288.25it/s]

Searching over common line pairs: 27%|██▋ | 8393/31125 [00:28<01:18, 288.88it/s]

Searching over common line pairs: 27%|██▋ | 8423/31125 [00:28<01:18, 289.54it/s]

Searching over common line pairs: 27%|██▋ | 8453/31125 [00:29<01:18, 289.87it/s]

Searching over common line pairs: 27%|██▋ | 8483/31125 [00:29<01:18, 290.17it/s]

Searching over common line pairs: 27%|██▋ | 8513/31125 [00:29<01:17, 290.21it/s]

Searching over common line pairs: 27%|██▋ | 8543/31125 [00:29<01:17, 290.00it/s]

Searching over common line pairs: 28%|██▊ | 8572/31125 [00:29<01:18, 289.11it/s]

Searching over common line pairs: 28%|██▊ | 8601/31125 [00:29<01:17, 289.15it/s]

Searching over common line pairs: 28%|██▊ | 8630/31125 [00:29<01:18, 288.28it/s]

Searching over common line pairs: 28%|██▊ | 8659/31125 [00:29<01:18, 287.98it/s]

Searching over common line pairs: 28%|██▊ | 8689/31125 [00:29<01:17, 289.03it/s]

Searching over common line pairs: 28%|██▊ | 8719/31125 [00:29<01:17, 289.64it/s]

Searching over common line pairs: 28%|██▊ | 8749/31125 [00:30<01:17, 290.02it/s]

Searching over common line pairs: 28%|██▊ | 8779/31125 [00:30<01:16, 290.33it/s]

Searching over common line pairs: 28%|██▊ | 8809/31125 [00:30<01:16, 290.50it/s]

Searching over common line pairs: 28%|██▊ | 8839/31125 [00:30<01:16, 290.67it/s]

Searching over common line pairs: 28%|██▊ | 8869/31125 [00:30<01:16, 290.34it/s]

Searching over common line pairs: 29%|██▊ | 8899/31125 [00:30<01:16, 290.16it/s]

Searching over common line pairs: 29%|██▊ | 8929/31125 [00:30<01:16, 290.21it/s]

Searching over common line pairs: 29%|██▉ | 8959/31125 [00:30<01:16, 290.12it/s]

Searching over common line pairs: 29%|██▉ | 8989/31125 [00:30<01:16, 290.37it/s]

Searching over common line pairs: 29%|██▉ | 9019/31125 [00:31<01:15, 290.96it/s]

Searching over common line pairs: 29%|██▉ | 9049/31125 [00:31<01:15, 291.15it/s]

Searching over common line pairs: 29%|██▉ | 9079/31125 [00:31<01:15, 291.10it/s]

Searching over common line pairs: 29%|██▉ | 9109/31125 [00:31<01:15, 291.24it/s]

Searching over common line pairs: 29%|██▉ | 9139/31125 [00:31<01:15, 291.23it/s]

Searching over common line pairs: 29%|██▉ | 9169/31125 [00:31<01:15, 290.89it/s]

Searching over common line pairs: 30%|██▉ | 9199/31125 [00:31<01:15, 290.45it/s]

Searching over common line pairs: 30%|██▉ | 9229/31125 [00:31<01:15, 290.71it/s]

Searching over common line pairs: 30%|██▉ | 9259/31125 [00:31<01:15, 290.86it/s]

Searching over common line pairs: 30%|██▉ | 9289/31125 [00:31<01:15, 290.68it/s]

Searching over common line pairs: 30%|██▉ | 9319/31125 [00:32<01:15, 290.54it/s]

Searching over common line pairs: 30%|███ | 9349/31125 [00:32<01:14, 290.79it/s]

Searching over common line pairs: 30%|███ | 9379/31125 [00:32<01:14, 291.00it/s]

Searching over common line pairs: 30%|███ | 9409/31125 [00:32<01:14, 290.84it/s]

Searching over common line pairs: 30%|███ | 9439/31125 [00:32<01:14, 291.29it/s]

Searching over common line pairs: 30%|███ | 9469/31125 [00:32<01:14, 290.82it/s]

Searching over common line pairs: 31%|███ | 9499/31125 [00:32<01:14, 290.71it/s]

Searching over common line pairs: 31%|███ | 9529/31125 [00:32<01:14, 290.63it/s]

Searching over common line pairs: 31%|███ | 9559/31125 [00:32<01:14, 290.76it/s]

Searching over common line pairs: 31%|███ | 9589/31125 [00:32<01:14, 290.70it/s]

Searching over common line pairs: 31%|███ | 9619/31125 [00:33<01:14, 290.41it/s]

Searching over common line pairs: 31%|███ | 9649/31125 [00:33<01:13, 290.29it/s]

Searching over common line pairs: 31%|███ | 9679/31125 [00:33<01:13, 290.72it/s]

Searching over common line pairs: 31%|███ | 9709/31125 [00:33<01:13, 290.09it/s]

Searching over common line pairs: 31%|███▏ | 9739/31125 [00:33<01:13, 289.83it/s]

Searching over common line pairs: 31%|███▏ | 9768/31125 [00:33<01:13, 289.49it/s]

Searching over common line pairs: 31%|███▏ | 9797/31125 [00:33<01:13, 289.00it/s]

Searching over common line pairs: 32%|███▏ | 9826/31125 [00:33<01:13, 289.03it/s]

Searching over common line pairs: 32%|███▏ | 9856/31125 [00:33<01:13, 289.71it/s]

Searching over common line pairs: 32%|███▏ | 9886/31125 [00:34<01:13, 289.90it/s]

Searching over common line pairs: 32%|███▏ | 9915/31125 [00:34<01:13, 289.81it/s]

Searching over common line pairs: 32%|███▏ | 9945/31125 [00:34<01:12, 290.32it/s]

Searching over common line pairs: 32%|███▏ | 9975/31125 [00:34<01:12, 290.10it/s]

Searching over common line pairs: 32%|███▏ | 10005/31125 [00:34<01:12, 289.90it/s]

Searching over common line pairs: 32%|███▏ | 10034/31125 [00:34<01:13, 288.53it/s]

Searching over common line pairs: 32%|███▏ | 10063/31125 [00:34<01:13, 288.50it/s]

Searching over common line pairs: 32%|███▏ | 10093/31125 [00:34<01:12, 289.34it/s]

Searching over common line pairs: 33%|███▎ | 10123/31125 [00:34<01:12, 289.68it/s]

Searching over common line pairs: 33%|███▎ | 10152/31125 [00:34<01:12, 289.47it/s]

Searching over common line pairs: 33%|███▎ | 10181/31125 [00:35<01:12, 289.36it/s]

Searching over common line pairs: 33%|███▎ | 10210/31125 [00:35<01:12, 289.05it/s]

Searching over common line pairs: 33%|███▎ | 10239/31125 [00:35<01:12, 288.76it/s]

Searching over common line pairs: 33%|███▎ | 10268/31125 [00:35<01:12, 288.65it/s]

Searching over common line pairs: 33%|███▎ | 10297/31125 [00:35<01:12, 288.13it/s]

Searching over common line pairs: 33%|███▎ | 10326/31125 [00:35<01:12, 288.20it/s]

Searching over common line pairs: 33%|███▎ | 10355/31125 [00:35<01:12, 287.91it/s]

Searching over common line pairs: 33%|███▎ | 10384/31125 [00:35<01:11, 288.30it/s]

Searching over common line pairs: 33%|███▎ | 10413/31125 [00:35<01:11, 288.28it/s]

Searching over common line pairs: 34%|███▎ | 10442/31125 [00:35<01:11, 288.18it/s]

Searching over common line pairs: 34%|███▎ | 10471/31125 [00:36<01:11, 288.39it/s]

Searching over common line pairs: 34%|███▎ | 10500/31125 [00:36<01:11, 288.55it/s]

Searching over common line pairs: 34%|███▍ | 10530/31125 [00:36<01:11, 288.99it/s]

Searching over common line pairs: 34%|███▍ | 10559/31125 [00:36<01:11, 289.09it/s]

Searching over common line pairs: 34%|███▍ | 10589/31125 [00:36<01:10, 289.40it/s]

Searching over common line pairs: 34%|███▍ | 10619/31125 [00:36<01:10, 289.69it/s]

Searching over common line pairs: 34%|███▍ | 10649/31125 [00:36<01:10, 290.21it/s]

Searching over common line pairs: 34%|███▍ | 10679/31125 [00:36<01:10, 289.78it/s]

Searching over common line pairs: 34%|███▍ | 10708/31125 [00:36<01:10, 289.69it/s]

Searching over common line pairs: 34%|███▍ | 10738/31125 [00:36<01:10, 289.79it/s]

Searching over common line pairs: 35%|███▍ | 10767/31125 [00:37<01:10, 289.25it/s]

Searching over common line pairs: 35%|███▍ | 10796/31125 [00:37<01:10, 287.99it/s]

Searching over common line pairs: 35%|███▍ | 10825/31125 [00:37<01:10, 287.92it/s]

Searching over common line pairs: 35%|███▍ | 10854/31125 [00:37<01:10, 288.06it/s]

Searching over common line pairs: 35%|███▍ | 10883/31125 [00:37<01:10, 288.39it/s]

Searching over common line pairs: 35%|███▌ | 10912/31125 [00:37<01:10, 288.15it/s]

Searching over common line pairs: 35%|███▌ | 10941/31125 [00:37<01:10, 287.97it/s]

Searching over common line pairs: 35%|███▌ | 10970/31125 [00:37<01:10, 287.65it/s]

Searching over common line pairs: 35%|███▌ | 10999/31125 [00:37<01:09, 287.73it/s]

Searching over common line pairs: 35%|███▌ | 11028/31125 [00:37<01:09, 288.15it/s]

Searching over common line pairs: 36%|███▌ | 11057/31125 [00:38<01:09, 288.63it/s]

Searching over common line pairs: 36%|███▌ | 11087/31125 [00:38<01:09, 289.25it/s]

Searching over common line pairs: 36%|███▌ | 11116/31125 [00:38<01:09, 289.26it/s]

Searching over common line pairs: 36%|███▌ | 11146/31125 [00:38<01:08, 289.75it/s]

Searching over common line pairs: 36%|███▌ | 11176/31125 [00:38<01:08, 289.95it/s]

Searching over common line pairs: 36%|███▌ | 11205/31125 [00:38<01:08, 289.58it/s]

Searching over common line pairs: 36%|███▌ | 11234/31125 [00:38<01:08, 289.62it/s]

Searching over common line pairs: 36%|███▌ | 11263/31125 [00:38<01:08, 289.00it/s]

Searching over common line pairs: 36%|███▋ | 11292/31125 [00:38<01:08, 289.07it/s]

Searching over common line pairs: 36%|███▋ | 11322/31125 [00:38<01:08, 289.57it/s]

Searching over common line pairs: 36%|███▋ | 11352/31125 [00:39<01:08, 289.83it/s]

Searching over common line pairs: 37%|███▋ | 11382/31125 [00:39<01:08, 289.88it/s]

Searching over common line pairs: 37%|███▋ | 11412/31125 [00:39<01:07, 290.35it/s]

Searching over common line pairs: 37%|███▋ | 11442/31125 [00:39<01:07, 289.66it/s]

Searching over common line pairs: 37%|███▋ | 11471/31125 [00:39<01:07, 289.75it/s]

Searching over common line pairs: 37%|███▋ | 11501/31125 [00:39<01:07, 290.55it/s]

Searching over common line pairs: 37%|███▋ | 11531/31125 [00:39<01:07, 290.85it/s]

Searching over common line pairs: 37%|███▋ | 11561/31125 [00:39<01:07, 289.73it/s]

Searching over common line pairs: 37%|███▋ | 11590/31125 [00:39<01:07, 289.44it/s]

Searching over common line pairs: 37%|███▋ | 11620/31125 [00:40<01:07, 289.81it/s]

Searching over common line pairs: 37%|███▋ | 11649/31125 [00:40<01:07, 289.46it/s]

Searching over common line pairs: 38%|███▊ | 11678/31125 [00:40<01:07, 289.30it/s]

Searching over common line pairs: 38%|███▊ | 11708/31125 [00:40<01:06, 289.87it/s]

Searching over common line pairs: 38%|███▊ | 11738/31125 [00:40<01:06, 290.00it/s]

Searching over common line pairs: 38%|███▊ | 11768/31125 [00:40<01:06, 290.21it/s]

Searching over common line pairs: 38%|███▊ | 11798/31125 [00:40<01:06, 289.63it/s]

Searching over common line pairs: 38%|███▊ | 11828/31125 [00:40<01:06, 289.90it/s]

Searching over common line pairs: 38%|███▊ | 11857/31125 [00:40<01:06, 289.90it/s]

Searching over common line pairs: 38%|███▊ | 11887/31125 [00:40<01:06, 290.23it/s]

Searching over common line pairs: 38%|███▊ | 11917/31125 [00:41<01:06, 290.56it/s]

Searching over common line pairs: 38%|███▊ | 11947/31125 [00:41<01:05, 291.32it/s]

Searching over common line pairs: 38%|███▊ | 11977/31125 [00:41<01:05, 292.07it/s]

Searching over common line pairs: 39%|███▊ | 12007/31125 [00:41<01:05, 292.56it/s]

Searching over common line pairs: 39%|███▊ | 12037/31125 [00:41<01:05, 291.34it/s]

Searching over common line pairs: 39%|███▉ | 12067/31125 [00:41<01:05, 290.82it/s]

Searching over common line pairs: 39%|███▉ | 12097/31125 [00:41<01:05, 290.42it/s]

Searching over common line pairs: 39%|███▉ | 12127/31125 [00:41<01:05, 289.87it/s]

Searching over common line pairs: 39%|███▉ | 12156/31125 [00:41<01:05, 289.38it/s]

Searching over common line pairs: 39%|███▉ | 12185/31125 [00:41<01:05, 289.37it/s]

Searching over common line pairs: 39%|███▉ | 12214/31125 [00:42<01:05, 289.53it/s]

Searching over common line pairs: 39%|███▉ | 12244/31125 [00:42<01:05, 290.25it/s]

Searching over common line pairs: 39%|███▉ | 12274/31125 [00:42<01:04, 290.75it/s]

Searching over common line pairs: 40%|███▉ | 12304/31125 [00:42<01:04, 291.28it/s]

Searching over common line pairs: 40%|███▉ | 12334/31125 [00:42<01:04, 291.31it/s]

Searching over common line pairs: 40%|███▉ | 12364/31125 [00:42<01:04, 290.35it/s]

Searching over common line pairs: 40%|███▉ | 12394/31125 [00:42<01:04, 289.41it/s]

Searching over common line pairs: 40%|███▉ | 12423/31125 [00:42<01:04, 288.90it/s]

Searching over common line pairs: 40%|████ | 12453/31125 [00:42<01:04, 289.54it/s]

Searching over common line pairs: 40%|████ | 12483/31125 [00:42<01:04, 290.40it/s]

Searching over common line pairs: 40%|████ | 12513/31125 [00:43<01:04, 290.58it/s]

Searching over common line pairs: 40%|████ | 12543/31125 [00:43<01:04, 290.25it/s]

Searching over common line pairs: 40%|████ | 12573/31125 [00:43<01:03, 291.39it/s]

Searching over common line pairs: 40%|████ | 12603/31125 [00:43<01:03, 291.96it/s]

Searching over common line pairs: 41%|████ | 12633/31125 [00:43<01:03, 292.99it/s]

Searching over common line pairs: 41%|████ | 12663/31125 [00:43<01:03, 292.82it/s]

Searching over common line pairs: 41%|████ | 12693/31125 [00:43<01:03, 290.99it/s]

Searching over common line pairs: 41%|████ | 12723/31125 [00:43<01:04, 285.78it/s]

Searching over common line pairs: 41%|████ | 12752/31125 [00:43<01:04, 283.62it/s]

Searching over common line pairs: 41%|████ | 12782/31125 [00:44<01:03, 286.64it/s]

Searching over common line pairs: 41%|████ | 12812/31125 [00:44<01:03, 288.87it/s]

Searching over common line pairs: 41%|████▏ | 12842/31125 [00:44<01:02, 291.47it/s]

Searching over common line pairs: 41%|████▏ | 12872/31125 [00:44<01:02, 292.30it/s]

Searching over common line pairs: 41%|████▏ | 12902/31125 [00:44<01:02, 292.75it/s]

Searching over common line pairs: 42%|████▏ | 12932/31125 [00:44<01:02, 293.27it/s]

Searching over common line pairs: 42%|████▏ | 12962/31125 [00:44<01:01, 294.25it/s]

Searching over common line pairs: 42%|████▏ | 12992/31125 [00:44<01:01, 293.93it/s]

Searching over common line pairs: 42%|████▏ | 13022/31125 [00:44<01:01, 294.03it/s]

Searching over common line pairs: 42%|████▏ | 13052/31125 [00:44<01:01, 293.39it/s]

Searching over common line pairs: 42%|████▏ | 13082/31125 [00:45<01:01, 293.32it/s]

Searching over common line pairs: 42%|████▏ | 13112/31125 [00:45<01:01, 293.03it/s]

Searching over common line pairs: 42%|████▏ | 13142/31125 [00:45<01:01, 292.93it/s]

Searching over common line pairs: 42%|████▏ | 13172/31125 [00:45<01:01, 293.23it/s]

Searching over common line pairs: 42%|████▏ | 13202/31125 [00:45<01:01, 293.05it/s]

Searching over common line pairs: 43%|████▎ | 13232/31125 [00:45<01:01, 293.11it/s]

Searching over common line pairs: 43%|████▎ | 13262/31125 [00:45<01:00, 293.09it/s]

Searching over common line pairs: 43%|████▎ | 13292/31125 [00:45<01:00, 292.87it/s]

Searching over common line pairs: 43%|████▎ | 13322/31125 [00:45<01:00, 292.72it/s]

Searching over common line pairs: 43%|████▎ | 13352/31125 [00:45<01:00, 293.12it/s]

Searching over common line pairs: 43%|████▎ | 13382/31125 [00:46<01:00, 291.98it/s]

Searching over common line pairs: 43%|████▎ | 13412/31125 [00:46<01:00, 292.18it/s]

Searching over common line pairs: 43%|████▎ | 13442/31125 [00:46<01:00, 292.01it/s]

Searching over common line pairs: 43%|████▎ | 13472/31125 [00:46<01:00, 292.53it/s]

Searching over common line pairs: 43%|████▎ | 13502/31125 [00:46<01:00, 293.24it/s]

Searching over common line pairs: 43%|████▎ | 13532/31125 [00:46<01:00, 292.98it/s]

Searching over common line pairs: 44%|████▎ | 13562/31125 [00:46<00:59, 293.03it/s]

Searching over common line pairs: 44%|████▎ | 13592/31125 [00:46<00:59, 292.97it/s]

Searching over common line pairs: 44%|████▍ | 13622/31125 [00:46<00:59, 293.30it/s]

Searching over common line pairs: 44%|████▍ | 13652/31125 [00:46<00:59, 292.74it/s]

Searching over common line pairs: 44%|████▍ | 13682/31125 [00:47<00:59, 292.70it/s]

Searching over common line pairs: 44%|████▍ | 13712/31125 [00:47<00:59, 293.45it/s]

Searching over common line pairs: 44%|████▍ | 13742/31125 [00:47<00:59, 293.13it/s]

Searching over common line pairs: 44%|████▍ | 13772/31125 [00:47<00:59, 293.10it/s]

Searching over common line pairs: 44%|████▍ | 13802/31125 [00:47<00:59, 292.97it/s]

Searching over common line pairs: 44%|████▍ | 13832/31125 [00:47<00:59, 293.06it/s]

Searching over common line pairs: 45%|████▍ | 13862/31125 [00:47<00:58, 292.83it/s]

Searching over common line pairs: 45%|████▍ | 13892/31125 [00:47<00:58, 292.58it/s]

Searching over common line pairs: 45%|████▍ | 13922/31125 [00:47<00:58, 292.29it/s]

Searching over common line pairs: 45%|████▍ | 13952/31125 [00:48<00:58, 291.72it/s]

Searching over common line pairs: 45%|████▍ | 13982/31125 [00:48<00:58, 291.40it/s]

Searching over common line pairs: 45%|████▌ | 14012/31125 [00:48<00:58, 291.75it/s]

Searching over common line pairs: 45%|████▌ | 14042/31125 [00:48<00:58, 292.35it/s]

Searching over common line pairs: 45%|████▌ | 14072/31125 [00:48<00:58, 292.26it/s]

Searching over common line pairs: 45%|████▌ | 14102/31125 [00:48<00:58, 291.82it/s]

Searching over common line pairs: 45%|████▌ | 14132/31125 [00:48<00:58, 291.94it/s]

Searching over common line pairs: 46%|████▌ | 14162/31125 [00:48<00:58, 291.71it/s]

Searching over common line pairs: 46%|████▌ | 14192/31125 [00:48<00:57, 292.63it/s]

Searching over common line pairs: 46%|████▌ | 14222/31125 [00:48<00:57, 292.41it/s]

Searching over common line pairs: 46%|████▌ | 14252/31125 [00:49<00:57, 292.85it/s]

Searching over common line pairs: 46%|████▌ | 14282/31125 [00:49<00:57, 292.15it/s]

Searching over common line pairs: 46%|████▌ | 14312/31125 [00:49<00:57, 293.47it/s]

Searching over common line pairs: 46%|████▌ | 14342/31125 [00:49<00:57, 294.04it/s]

Searching over common line pairs: 46%|████▌ | 14372/31125 [00:49<00:57, 293.46it/s]

Searching over common line pairs: 46%|████▋ | 14402/31125 [00:49<00:57, 293.13it/s]

Searching over common line pairs: 46%|████▋ | 14432/31125 [00:49<00:57, 292.30it/s]

Searching over common line pairs: 46%|████▋ | 14462/31125 [00:49<00:56, 292.34it/s]

Searching over common line pairs: 47%|████▋ | 14492/31125 [00:49<00:56, 292.51it/s]

Searching over common line pairs: 47%|████▋ | 14522/31125 [00:49<00:56, 292.52it/s]

Searching over common line pairs: 47%|████▋ | 14552/31125 [00:50<00:56, 292.07it/s]

Searching over common line pairs: 47%|████▋ | 14582/31125 [00:50<00:56, 291.47it/s]

Searching over common line pairs: 47%|████▋ | 14612/31125 [00:50<00:56, 290.85it/s]

Searching over common line pairs: 47%|████▋ | 14642/31125 [00:50<00:56, 291.01it/s]

Searching over common line pairs: 47%|████▋ | 14672/31125 [00:50<00:56, 291.18it/s]

Searching over common line pairs: 47%|████▋ | 14702/31125 [00:50<00:56, 290.08it/s]

Searching over common line pairs: 47%|████▋ | 14732/31125 [00:50<00:56, 290.26it/s]

Searching over common line pairs: 47%|████▋ | 14762/31125 [00:50<00:56, 289.70it/s]

Searching over common line pairs: 48%|████▊ | 14792/31125 [00:50<00:56, 290.25it/s]

Searching over common line pairs: 48%|████▊ | 14822/31125 [00:50<00:56, 290.19it/s]

Searching over common line pairs: 48%|████▊ | 14852/31125 [00:51<00:56, 290.48it/s]

Searching over common line pairs: 48%|████▊ | 14882/31125 [00:51<00:56, 289.65it/s]

Searching over common line pairs: 48%|████▊ | 14912/31125 [00:51<00:55, 290.09it/s]

Searching over common line pairs: 48%|████▊ | 14942/31125 [00:51<00:55, 289.83it/s]

Searching over common line pairs: 48%|████▊ | 14971/31125 [00:51<00:55, 289.72it/s]

Searching over common line pairs: 48%|████▊ | 15000/31125 [00:51<00:55, 289.65it/s]
